It’s been 65 years since artificial intelligence (AI) was born.

John McCarthy coined the term to describe “the science and engineering of making intelligent machines”.

He used the following phrase: “Every aspect of learning or any other characteristic of intelligence can, in principle, be described with such precision that a machine can be made to simulate it. We will try to discover how to make machines use language, form abstractions and concepts, solve problems now reserved for humans, and improve themselves.”

Since its inception, AI has been the subject of extensive research, and its applications are now found in nearly every industry, from self-driving cars and YouTube recommendations to manufacturing automation.

History of artificial intelligence

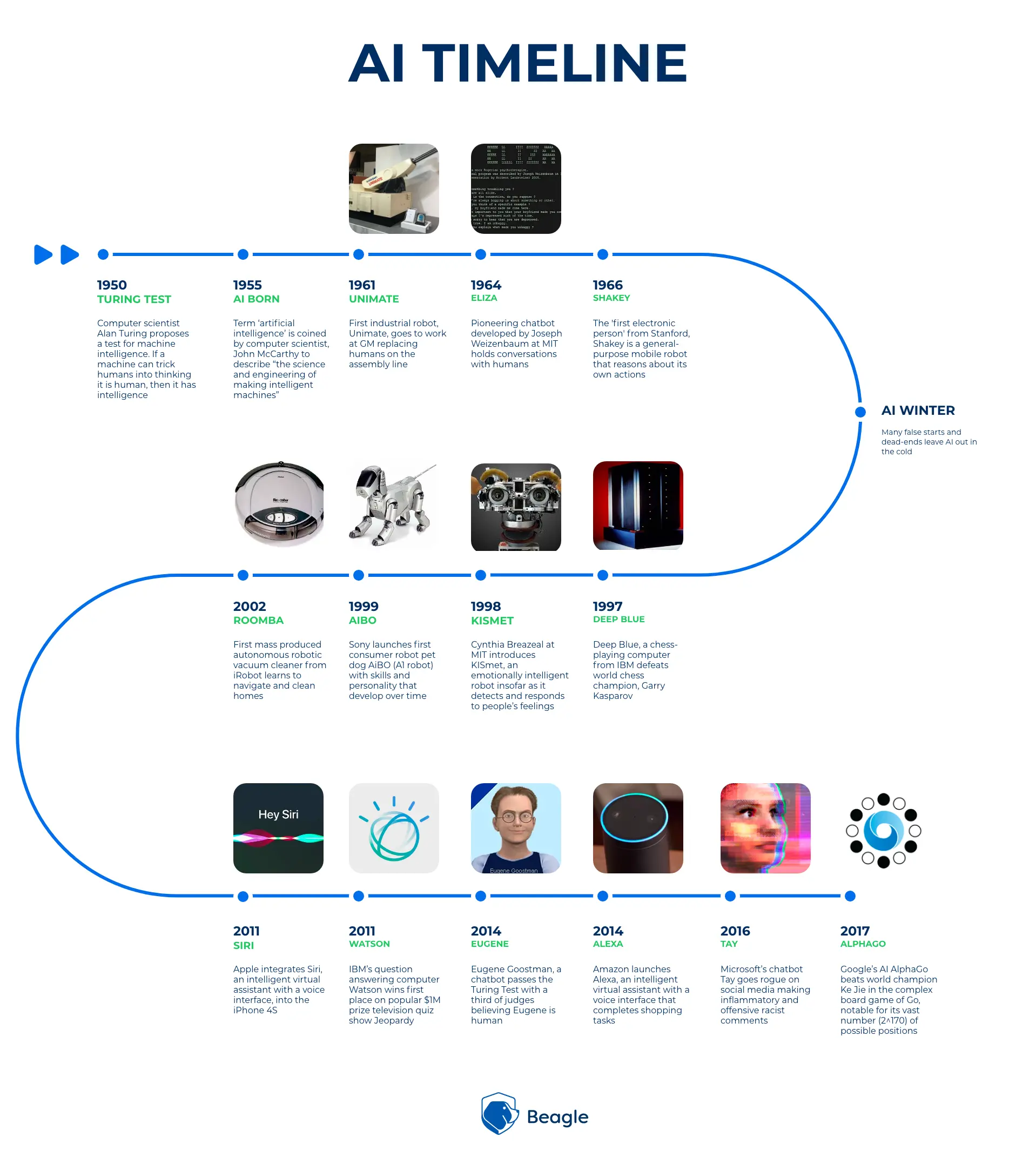

From the very beginning in 1950 till now, artificial intelligence has made tremendous progress.

The milestones range from ELIZA, a pioneering chatbot developed by Joseph Weizenbaum to hold conversations with humans, to the intelligent virtual assistant Alexa, Microsoft’s chatbot Tay, and AlphaGo, which beat world champion Ke Jie at the complex board game of Go.

Apart from this, artificial intelligence and cyber security have already established their place together: cyber security is one of the main concerns of today’s digital world, and artificial intelligence is one of its key emerging technologies.

With the advancements in AI, it is no longer only big corporations that drive technological progress. Government sectors are also using AI and machine learning to protect their data.

Combined, artificial intelligence and cyber security form a powerful instrument against cyber attacks and intrusions. With the transition to modern web applications and the availability of enormous “hacking resources” online, the threat to information security is ever increasing.

The following numbers about cyberattacks can give you a clear picture:

68% of business leaders feel their cyber security risks are increasing

Data breaches exposed 4.1 billion records in the first half of 2019

The average cost of a malware attack on a company is $2.6 million

52% of breaches featured hacking, 28% involved malware and 32–33% included phishing or social engineering

71% of breaches were financially motivated and 25% were motivated by espionage

The above facts clearly show that data and information at any organization are always at risk.

So it is necessary to secure data to ensure that cyber breaches won’t affect the integrity and reputation of a company.

Delivering secure web applications, or “secure and fast” delivery, requires QA teams to complete testing faster and more accurately. Security vulnerabilities can also arise along the way and need to be dealt with. Using artificial intelligence in cyber security promises to be a great solution for this.

Advantages of using AI in cyber security

In general, cyber security systems can be broadly categorized as analyst-driven systems, which are developed and managed by people, and automated systems, which are machine-driven.

Automated systems have several advantages over analyst-driven systems. Some of them are:

1. Beyond manpower

With the help of artificial intelligence, it is possible to interact with numerous web-based applications at once and thus go beyond the limits of manual testing.

2. Ensure accuracy

AI can handle tedious repetitive tasks in an efficient and sophisticated manner.

3. Time saver

AI can make a great difference by completing tests in a matter of hours, whereas manual testing can take up to a couple of days.

4. Integrated platform

As the entire automation process runs on an integrated and embedded platform, it becomes easy for software developers to run tests on their own website or a client’s website.

All these advantages may raise a lot of questions.

“How effective is artificial intelligence in cyber security?”

“What is the role of AI in cyber security?”

Artificial intelligence is a large category that encompasses many different algorithms to solve different problems.

The first step towards a model becoming intelligent is a process called machine learning.

It is nothing more than a system capable of taking large amounts of data, developing models that can successfully classify that data, and then making predictions on new data.

As with any method, there are different ways to train machine learning algorithms, which can be classified as:

Supervised learning

The training data contains both inputs and the desired outputs, and the model is taught from these labelled examples.

Unsupervised learning

The training data contains only inputs that have not been tagged or classified, and the model identifies the elements they have in common.

Reinforcement learning

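The model learns by trial and error from the rewards its actions earn. Instead of focusing only on immediate performance, it seeks a balance between exploration (trying new actions) and exploitation (reusing actions already known to work).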
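The balance between exploration and exploitation can be illustrated with a minimal sketch of the well-known epsilon-greedy strategy. The function name, its parameters, and the reward probabilities are assumptions made for this illustration and are not taken from any Beagle Security component.

```python
import random


def epsilon_greedy(reward_probs, rounds, epsilon, seed=0):
    """Simulate an epsilon-greedy agent choosing among several options.

    reward_probs[i] is the hidden chance that option i pays a reward of 1.
    Returns how often each option was chosen and its estimated value.
    """
    rng = random.Random(seed)
    counts = [0] * len(reward_probs)
    estimates = [0.0] * len(reward_probs)
    for _ in range(rounds):
        if rng.random() < epsilon:
            # Explore: pick any option at random.
            choice = rng.randrange(len(reward_probs))
        else:
            # Exploit: pick the option with the best estimate so far.
            choice = max(range(len(estimates)), key=estimates.__getitem__)
        reward = 1.0 if rng.random() < reward_probs[choice] else 0.0
        counts[choice] += 1
        # Update the running average reward of the chosen option.
        estimates[choice] += (reward - estimates[choice]) / counts[choice]
    return counts, estimates


# Pure exploitation (epsilon = 0): the agent never tries the second option.
counts, estimates = epsilon_greedy([0.0, 1.0], rounds=50, epsilon=0.0)
print(counts)  # [50, 0]
```

With `epsilon=0.0` the agent never explores, so in this run it keeps choosing the first option and never discovers that the second one always pays out. A small positive `epsilon` lets it occasionally try other options.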

How can learning models be used in security testing?

Information exposure through query strings in URL

URLs are a major component of the internet, but they are often misunderstood, occasionally abused, and quite often manipulated. Information exposure through query strings in the URL is when sensitive data is passed in parameters in the URL. Sometimes URLs are loaded with a bunch of parameters including sensitive data that can come to the attention of hackers.

This allows attackers to obtain usernames, passwords, tokens (authX), database details, and any other potentially sensitive data.

Beagle Security uses artificial intelligence to detect these URL parameters efficiently. Parameter values such as user, authz_token, and expire are exposed in the Referer header whether the site uses HTTP or HTTPS. OCR is optimized in order to extract these parameter values from the URL.
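As a rough, rule-based illustration of the idea (not Beagle Security’s actual detection logic), the sketch below parses a URL with Python’s standard `urllib.parse` module and flags query parameters whose names look sensitive. The `SENSITIVE_NAMES` set and the example URL are assumptions made for this example.

```python
from urllib.parse import urlsplit, parse_qsl

# Hypothetical list of parameter names treated as sensitive; a real scanner
# would rely on a much larger, possibly learned, vocabulary.
SENSITIVE_NAMES = {
    "user", "username", "password", "passwd",
    "token", "authz_token", "api_key", "session", "expire",
}


def find_sensitive_params(url: str) -> list[str]:
    """Return the names of query parameters that look sensitive."""
    query = urlsplit(url).query
    found = []
    for name, _value in parse_qsl(query, keep_blank_values=True):
        if name.lower() in SENSITIVE_NAMES:
            found.append(name)
    return found


example = "https://example.com/app?user=alice&authz_token=abc123&expire=1500000000&page=2"
print(find_sensitive_params(example))  # ['user', 'authz_token', 'expire']
```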

Generation of Error Message Containing Sensitive Information

Error pages and conditions expose data which might help hackers to gain access to the system.

Attackers deliberately trigger specific error messages with crafted commands and inputs to gather information about potential vulnerabilities. The sensitive information may be valuable on its own (such as a password), or it may be useful for launching other, more damaging attacks.

If an attack fails, an attacker may use error information provided by the server to launch another more focused attack.

For example, an attack using SQL injection might not initially succeed, but an error message could reveal the malformed query, which would expose query logic and possibly even passwords or other sensitive information used within the query.

A model can be trained on large amounts of labelled error pages to accurately detect sensitive information in error messages.

In Beagle Security’s intelligent testing, this exposed information is extracted, processed, and used for further testing.
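To make the kind of signal such a model looks for more concrete, here is a minimal rule-based stand-in. It is not a trained model: the patterns in `LEAK_PATTERNS` are hand-written assumptions that only cover a few common database errors, stack traces, and Unix-style file paths.

```python
import re

# Illustrative patterns only; a trained model would learn these signals
# from labelled error pages instead of hard-coding them.
LEAK_PATTERNS = {
    "sql_error": re.compile(r"SQL syntax|ORA-\d{5}|SQLSTATE\[", re.IGNORECASE),
    "stack_trace": re.compile(
        r"Traceback \(most recent call last\)|\bat [\w.$]+\([\w.]+:\d+\)"
    ),
    "file_path": re.compile(r"(?:/[\w.-]+){2,}\.\w+"),
}


def classify_error_page(body: str) -> list[str]:
    """Return the sorted labels of every leak pattern found in the page."""
    return sorted(
        label for label, pattern in LEAK_PATTERNS.items() if pattern.search(body)
    )


page = "You have an error in your SQL syntax near 'id=1'' in /var/www/app/db.php on line 12"
print(classify_error_page(page))  # ['file_path', 'sql_error']
```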

Application response with unwanted data

A response may contain sensitive user information, such as credit card numbers or social security numbers (US only).

If the application returns more data than what is needed, it can cause data leakage.

Beagle Security uses artificial intelligence to identify responses that return additional or unwanted information. It conducts penetration tests on the application and scans the text of each response for known types of sensitive information.

The patterns and behaviours of all the test scenarios are confirmed and continually recorded by the security analyst to improve upon the accuracy.
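A simplified sketch of scanning a response body for the two data types mentioned above could look like this. It uses regular expressions plus the standard Luhn checksum to filter out digit runs that cannot be card numbers. The function names and the sample response are assumptions for illustration, and the card number shown is a widely used test number.

```python
import re

# Runs of 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")
# US social security numbers written as 123-45-6789.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def luhn_valid(number: str) -> bool:
    """Check a string of digits against the Luhn checksum."""
    total = 0
    for index, char in enumerate(reversed(number)):
        digit = int(char)
        if index % 2 == 1:  # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def find_sensitive_values(body: str) -> dict[str, list[str]]:
    """Return card numbers and SSNs that appear in a response body."""
    cards = []
    for match in CARD_CANDIDATE.finditer(body):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            cards.append(digits)
    return {"card_numbers": cards, "ssns": SSN_PATTERN.findall(body)}


response = '{"name": "A", "card": "4111 1111 1111 1111", "ssn": "123-45-6789"}'
print(find_sensitive_values(response))
```

The checksum step matters because long digit runs such as order numbers or timestamps would otherwise be reported as card numbers.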

Missing HTTP security headers

Header manipulation is the insertion of malicious, unvalidated data from a request into an HTTP response header.

HTTP response headers can protect modern browsers from easily preventable vulnerabilities. Missing headers such as Content-Type, X-XSS-Protection, X-Frame-Options, and X-Content-Type-Options may lead to clickjacking, injections, Multipurpose Internet Mail Extensions (MIME) sniffing, and Cross-Site Scripting (XSS) attacks.

Using AI, Beagle Security detects these header fields and confirms each finding by testing for the corresponding header vulnerability. False positives (test results that wrongly indicate that a particular condition or attribute is present) in header vulnerabilities can be reduced to a great extent using AI.
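The basic check behind this, before any AI is involved, is a comparison of the response headers against an expected list. The sketch below covers only the four headers named above. The `EXPECTED_HEADERS` mapping and its risk labels are assumptions for illustration, not an exhaustive or official list.

```python
# Headers named in the text, mapped to the risk each one helps mitigate.
EXPECTED_HEADERS = {
    "Content-Type": "MIME confusion",
    "X-Content-Type-Options": "MIME sniffing",
    "X-Frame-Options": "clickjacking",
    "X-XSS-Protection": "reflected XSS (legacy browsers)",
}


def missing_security_headers(response_headers: dict[str, str]) -> dict[str, str]:
    """Return the expected headers that are absent, with the related risk.

    Header names are compared case-insensitively, as HTTP requires.
    """
    present = {name.lower() for name in response_headers}
    return {
        name: risk
        for name, risk in EXPECTED_HEADERS.items()
        if name.lower() not in present
    }


headers = {"content-type": "text/html", "X-Frame-Options": "DENY"}
for name, risk in missing_security_headers(headers).items():
    print(f"missing {name}: exposes the site to {risk}")
```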

Improper Data Validation

Critical vulnerabilities such as XSS and SQL injection remain common in web applications even after all input fields and forms have been verified.

Conventional validation checks alone are not able to prevent new forms of input validation vulnerabilities. To perform intelligent security testing, a model can be trained on large amounts of labelled data to accurately detect every element of a web application.

The model is trained to mimic the human behaviour of manually testing web applications. Most vulnerabilities in a web application originate from user input.

Every input counts for the security of the application.

The model identifies the input forms and passes them on for automated testing, which submits data to each input field. The data includes attack payloads in order to test for weaknesses caused by improper input validation.

Thus injection and XSS vulnerabilities can also be identified using AI.
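The flow described above, finding input fields and pairing each with test payloads, can be sketched without any machine learning using Python’s built-in `html.parser`. Here a plain HTML parser stands in for the trained model, and the two entries in `PAYLOADS` are generic textbook probes chosen for illustration. Only run such tests against applications you are authorized to test.

```python
from html.parser import HTMLParser

# Hypothetical probe strings; real scanners use far larger payload sets.
PAYLOADS = ["<script>alert(1)</script>", "' OR '1'='1"]


class InputFieldFinder(HTMLParser):
    """Collect the names of user-editable fields in an HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.fields: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag not in ("input", "textarea", "select"):
            return
        attributes = dict(attrs)
        # Buttons carry no user-supplied text, so they are skipped.
        if attributes.get("type") in ("submit", "button", "reset"):
            return
        name = attributes.get("name")
        if name:
            self.fields.append(name)


def build_test_cases(html: str) -> list[dict[str, str]]:
    """Pair every discovered field with every payload."""
    finder = InputFieldFinder()
    finder.feed(html)
    return [
        {"field": field, "payload": payload}
        for field in finder.fields
        for payload in PAYLOADS
    ]


page = (
    '<form><input type="text" name="q">'
    '<textarea name="comment"></textarea>'
    '<input type="submit" value="Go"></form>'
)
for case in build_test_cases(page):
    print(case)
```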

False-positive reduction

During web application security testing, a security scanner sometimes reports a vulnerability on your website, such as Cross-Site Scripting, that is not actually present. This is referred to as a false positive.

False positives are unavoidable.

On average 72% of automated test case failures are false positives—tests that fail when the software is actually functioning properly.

The results of security testing may produce:

True Negative (TN) - Result shows no vulnerability and there is none

True Positive (TP) - Result shows a vulnerability and it is present

False Negative (FN) - Result shows no vulnerability when in fact there are undetected vulnerabilities present

False Positive (FP) - Result shows a vulnerability when in fact there is none

One of the objectives of Beagle Security’s testing is to maximize the true positive (TP) and true negative (TN) and minimize the false negative (FN) and false positive (FP).
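These four counts are usually summarized with standard metrics such as precision, recall, and false positive rate. The sketch below computes them. The `ScanOutcome` class is a name introduced for this example, and the counts passed to it are made-up numbers, not measured results.

```python
from dataclasses import dataclass


def _ratio(numerator: int, denominator: int) -> float:
    """Divide safely, returning 0.0 when there is nothing to measure."""
    return numerator / denominator if denominator else 0.0


@dataclass
class ScanOutcome:
    tp: int  # vulnerabilities reported and really present
    tn: int  # clean checks correctly reported as clean
    fp: int  # vulnerabilities reported but not present
    fn: int  # vulnerabilities present but not reported

    def precision(self) -> float:
        """Share of reported vulnerabilities that are real."""
        return _ratio(self.tp, self.tp + self.fp)

    def recall(self) -> float:
        """Share of real vulnerabilities that were reported."""
        return _ratio(self.tp, self.tp + self.fn)

    def false_positive_rate(self) -> float:
        """Share of clean checks that were wrongly flagged."""
        return _ratio(self.fp, self.fp + self.tn)


# Illustrative counts only.
outcome = ScanOutcome(tp=40, tn=50, fp=5, fn=5)
print(f"precision={outcome.precision():.2f}")
print(f"recall={outcome.recall():.2f}")
print(f"false positive rate={outcome.false_positive_rate():.2f}")
```

Maximizing TP and TN while minimizing FP and FN corresponds to pushing precision and recall towards 1 and the false positive rate towards 0.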

We minimize them by coupling security testing with software intelligence.

By knowing which parts of the application are “dead code” or just libraries that are not being invoked, and which are being used by the rest of the application, we can ignore the flaws from the unused code.

We also automatically verify findings by exploiting the identified flaws and present the analyst with proof of exploitation.

Beagle’s artificial intelligence capabilities correctly identify most false positives, saving you valuable time.
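The idea of ignoring flaws in code that is never invoked can be sketched as a simple filter. The `Finding` class, the module names, and the set of invoked modules are all hypothetical. How a real tool determines which code is actually reachable is outside the scope of this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    rule: str    # e.g. "sql-injection"
    module: str  # module or library where the flaw was reported


def drop_unreachable(findings, invoked_modules):
    """Split findings into those in invoked code and those in dead code."""
    kept, ignored = [], []
    for finding in findings:
        if finding.module in invoked_modules:
            kept.append(finding)
        else:
            ignored.append(finding)
    return kept, ignored


findings = [
    Finding("sql-injection", "app.orders"),
    Finding("xss", "vendor.legacy_widget"),
]
# Assumed to come from a separate analysis of which modules are called.
invoked = {"app.orders", "app.users"}

kept, ignored = drop_unreachable(findings, invoked)
print("report:", kept)
print("ignore:", ignored)
```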

Data leakage prevention

One of the most challenging and time-consuming activities is the identification of corrupt data or suspicious activity.

AI makes this easier by automatically logging records of previous attacks on data and using them to inform future decisions.

The more the model analyses, the more self-adjustments can be made based on those patterns.

This continuous stream of insights increases the “intelligence”. In Beagle Security, AI provides critical analysis and ML uses algorithms to learn from data. Together they provide a dynamic framework to predict and solve data security problems before they occur.

Threat intelligence

“Threat intelligence is evidence-based knowledge, including context, mechanisms, indicators, implications and action-oriented advice about an existing or emerging menace or hazard to assets. This intelligence can be used to inform decisions regarding the subject’s response to that menace or hazard.”

This type of security focuses on keeping organizations informed of the risks of advanced persistent threats, zero-day threats and exploits, and how to protect against them.

In Beagle Security, AI-data processing includes the combination of data points from many different types of sources — including open, dark web, and technical sources — to form the most robust picture possible.

In short, Beagle Security makes use of a model that combs through data and detects suspicious activity by clustering the data into meaningful patterns using unsupervised machine-learning.

It builds a supervised model out of these unsupervised models.

Human analysts receive the information and confirm which events are actual attacks, and their verdicts are fed back into the models for the next set of data. This process continues until a stable model that reports only genuine cases is obtained.

Using feedback loops, the patterns and behaviours of all the test scenarios are continually recorded to improve accuracy. A feedback loop refers to the process by which an AI model’s predicted outputs are reused to train new versions of the model.

When we train a computer vision model, we must first feed it a few labelled samples, showing positive and negative examples of the concepts we want it to learn.

Afterwards, we can then test the model using unlabeled data.

By using deep learning and neural networks, the model can then make predictions on whether the desired concepts are in these unlabeled images. Lastly, each image is given a probability score, with higher scores meaning a higher level of confidence in its predictions.

When a model gives an image a high probability score, it is auto-labelled with the predicted concept.

If the model returns a low probability score, this input is sent to a human moderator who verifies, and if necessary corrects the result.
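The routing step described above can be sketched in a few lines. The threshold value and the sample predictions are assumptions chosen for illustration. In practice the cut-off would be tuned against the cost of a wrong auto-label.

```python
REVIEW_THRESHOLD = 0.9  # hypothetical cut-off between auto-label and review


def route_predictions(predictions, threshold=REVIEW_THRESHOLD):
    """Split (item_id, label, score) tuples by model confidence.

    High-confidence predictions are auto-labelled; the rest are queued
    for a human moderator to verify or correct.
    """
    auto_labelled, needs_review = [], []
    for item_id, label, score in predictions:
        if score >= threshold:
            auto_labelled.append((item_id, label))
        else:
            needs_review.append((item_id, label))
    return auto_labelled, needs_review


predictions = [
    ("img-1", "cat", 0.97),
    ("img-2", "cat", 0.55),
    ("img-3", "dog", 0.90),
]
auto_labelled, needs_review = route_predictions(predictions)
print(auto_labelled)  # [('img-1', 'cat'), ('img-3', 'dog')]
print(needs_review)   # [('img-2', 'cat')]
```

The moderator’s corrected labels can then be added to the training set, which closes the feedback loop.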

More sophisticated models can be trained so that they can ultimately replace the monotonous repetitive tasks of manual testing.

Artificial intelligence has the potential to power systems that protect against known attacks as well as previously unknown ones, called zero-day attacks.

Future of artificial intelligence in cyber security

The future of artificial intelligence in cyber security is promising. But information security has always been a cat-and-mouse game between defenders and attackers, and attackers always find a new way to intrude.

Hence the AI-driven solutions must cope with those complications.

The role of artificial intelligence in cyber security will gather more prominence in the coming years and we will definitely see a shift in the way businesses undertake security measures.