Voice-controlled smart devices simplify many day-to-day tasks, but they have been under scrutiny for security risks ever since their introduction a few years ago.

Now there is a new and very unconventional risk that few would have thought possible. A team of cybersecurity researchers has found a way to take over Google Home, Amazon Alexa, Apple Siri and similar voice assistants from a few metres to more than a hundred metres away using laser light.

Yes, you read that right: laser light can control a voice-controlled device. How strange does that sound?

The researchers published a paper, dubbed ‘Light Commands’, that details the procedure they used to exploit the vulnerability. The hack relies on a flaw in the MEMS (micro-electro-mechanical systems) microphones embedded in voice-controlled systems, which unintentionally respond to light as if it were sound.
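To make the idea of "light as sound" more concrete, here is a minimal sketch. It assumes the command is encoded by varying the laser's brightness in step with the audio waveform (amplitude modulation). The function names and the current values (`bias_ma`, `depth_ma`) are hypothetical, chosen only for illustration; this is not the researchers' actual setup.

```python
import math


def tone(freq_hz, sample_rate, n_samples):
    """Generate a sine-wave test tone with samples in [-1, 1]."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n_samples)]


def modulate_laser_current(audio, bias_ma=200.0, depth_ma=75.0):
    """Map audio samples in [-1, 1] to a laser drive current in mA.

    bias_ma and depth_ma are hypothetical illustration values.
    The current swings around the bias, so the light intensity
    rises and falls with the audio waveform.
    """
    if depth_ma > bias_ma:
        raise ValueError("depth_ma must not exceed bias_ma")
    # Clip each sample to [-1, 1] before scaling it around the bias.
    return [bias_ma + depth_ma * max(-1.0, min(1.0, s)) for s in audio]


samples = tone(freq_hz=1000, sample_rate=16000, n_samples=16)
current = modulate_laser_current(samples)
```

A microphone that reacts to light would then pick up these brightness swings much as it would the pressure changes of the original sound.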

So, are only smart speakers susceptible to this risk? Not really.

Voice assistants in phones, tablets, and other smart devices are also vulnerable to this new light-based signal injection attack.

The impact of such an attack depends on how much access your voice assistant has to other connected devices. As the researchers demonstrated, a Light Commands attack can hijack other smart systems linked to the targeted assistant.

By exploiting this vulnerability, an attacker could control smart home switches, open smart garage doors, make online purchases, and remotely unlock and start certain vehicles.

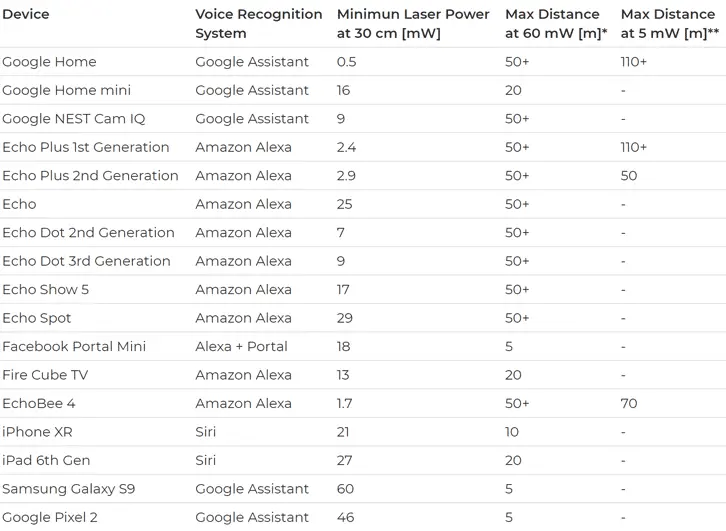

The researchers were able to command a Google Home to open a garage door by aiming a laser at the device's microphone. They were also able to hijack a voice assistant more than 350 feet (roughly 107 metres) away by focusing the laser with a telephoto lens. They also tested the vulnerability on a variety of smartphones with voice assistants, including the Samsung Galaxy S9, the Google Pixel 2 and the iPhone XR.

The attack isn’t easy to pull off, since attackers need a direct line of sight to the target device. The laser light is also visible, and the targeted device will issue a vocal confirmation of the commands.

Solving the problem properly would require redesigning the majority of microphones in smart devices. For now, the best defence is to keep your voice assistant devices out of the line of sight from outside, for example by placing them away from windows.

Internet-connected devices have a long history of security vulnerabilities. With a large number of IoT devices hitting the market year after year, consumers need to remain cautious about their security.